Control UIs using wireless earbuds and on-face interactions

10/09/2023

A few weeks ago, I stumbled upon a very cool research project around using the sound of touch gestures on your face to create new interactions with interfaces.

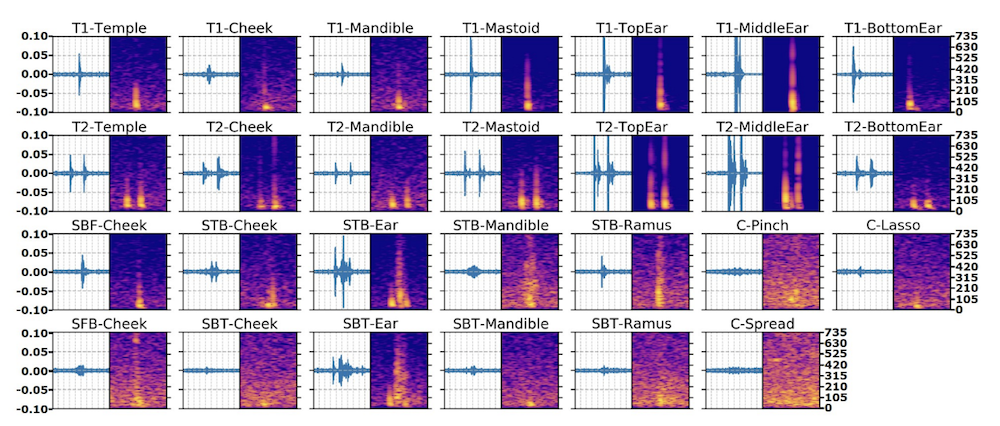

This research uses the microphone placed in Bluetooth earphones to record the subtle sounds made by movements of your fingers on your face, for example tapping your cheekbone or swiping your finger down your jaw. When displayed on a spectrogram, each sound shows a relatively unique signature. After recording enough samples, a machine learning model can be trained to automatically classify live data and map it to these gestures, allowing them to be used as a new way of interacting.

Below is an example of the spectrograms from the research.

After reading the paper, I decided to try and recreate something similar using JavaScript. I've experimented with using sound data and machine learning in the past, and the result was quite successful. However, I had never thought about working with more subtle sounds like the ones this research is focusing on.

Demo

Below is an example of the outcome of my experiment where I show that I'm scrolling a webpage by tapping my cheekbone.

Tools

To build this prototype, I kept things as simple as I could and used:

- My Apple AirPods (1st generation)

- Standard JavaScript (no need for frameworks)

- TensorFlow.js and their speech commands model

Training the model

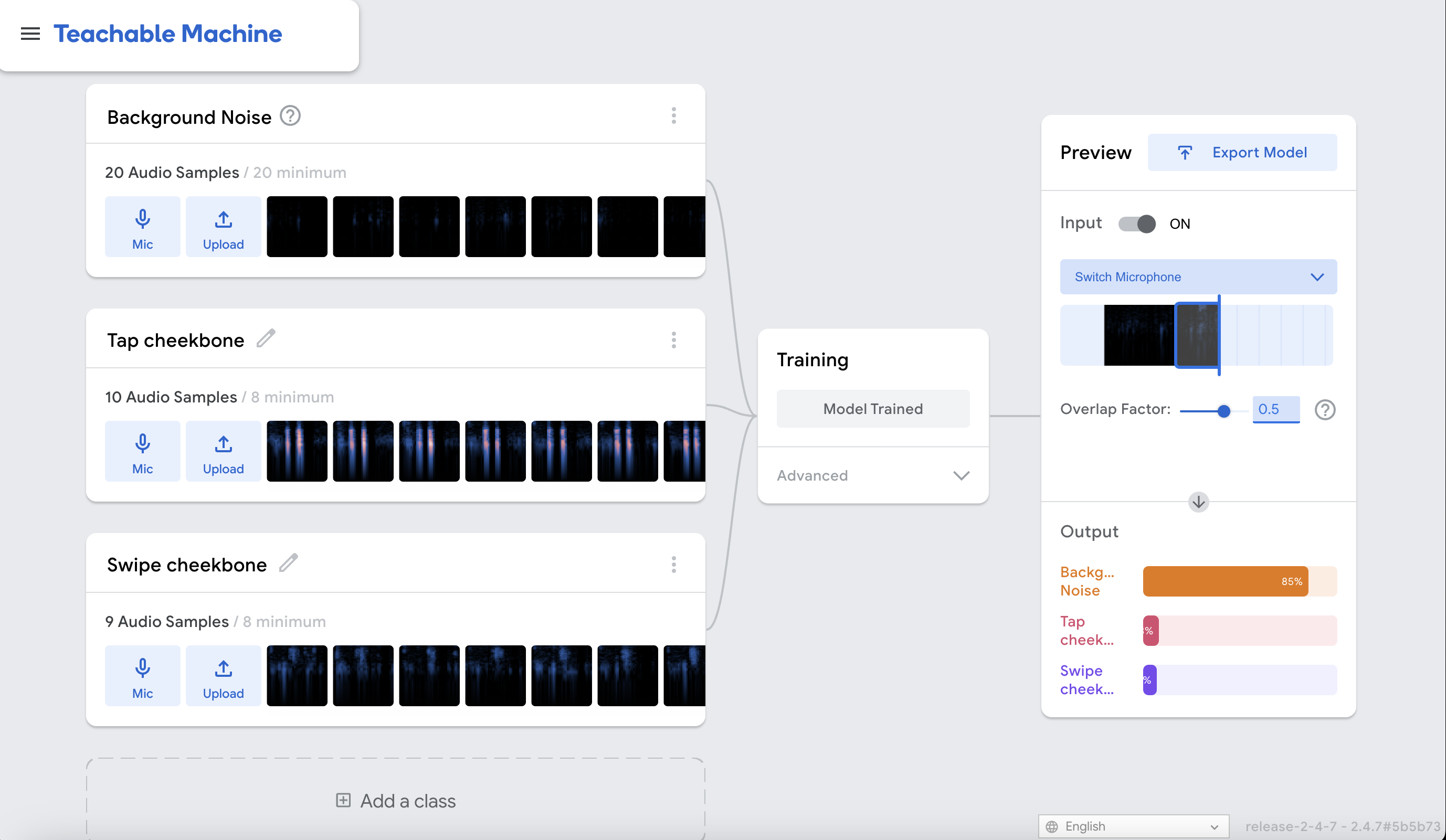

I used the Teachable Machine platform to quickly train my model. This side project is only a prototype to validate whether this idea is feasible, so I didn't try to make it extremely accurate. The research mentions that participants recorded more than 14,000 samples, and it would have taken me a long time to do this on my own.

Teachable Machine allows you to use transfer learning on an existing model so I used their sound recognition feature and spent some time recording samples for 4 different gestures:

- Tap cheekbone

- Tap jaw

- Swipe cheekbone

- Swipe jaw

Below is a screenshot of what Teachable Machine looks like once sound samples are recorded and spectrograms are displayed.

In a few minutes, my model was ready to be exported and implemented in a demo app.

Implementation

Once the model is exported, it can be implemented in a few lines of code.

Initial setup

First, the only package needed is the speech-commands model.

```javascript
import * as speechCommands from "@tensorflow-models/speech-commands";
```

To start using the microphone on a web page, there needs to be a user action, here a click on a button to start the detection. We also need the URL of the hosted model.

```javascript
const btn = document.querySelector("button");

// Replace ${your-model-ID} with the ID of your own exported model.
const CUSTOM_MODEL_URL = "https://teachablemachine.withgoogle.com/models/${your-model-ID}/";
```

Loading the model

Before being able to start the detection, the model needs to be loaded using its model.json and metadata.json files, passed as parameters to speechCommands.create.

```javascript
async function loadModel() {
  const modelURL = CUSTOM_MODEL_URL + "model.json"; // model topology
  const metadataURL = CUSTOM_MODEL_URL + "metadata.json"; // model metadata

  const recognizer = speechCommands.create(
    "BROWSER_FFT",
    undefined, // speech commands vocabulary feature, not useful here
    modelURL,
    metadataURL
  );

  await recognizer.ensureModelLoaded();

  return recognizer;
}
```

Starting the recognition

Once the model is loaded, the application is ready to start listening to live audio input, and the model should be able to classify it as one of the gestures it was trained on.

```javascript
const startRecognition = async () => {
  const recognizer = await loadModel();

  // Get the labels the model was trained with.
  const classLabels = recognizer.wordLabels();

  // Start listening to live audio data.
  recognizer.listen(
    (result) => {
      // result.scores returns an array with a probability score for each gesture
      const scores = result.scores;

      // Here, I'm getting the index of the gesture that is the most likely to have been detected
      const predictionIndex = scores.indexOf(Math.max(...scores));

      // Using this index, I get the predicted gesture label.
      const prediction = classLabels[predictionIndex];

      // Here is the fun part where you can decide the types of interactions you want
      if (prediction === "tap cheekbone") {
        console.log("swipe up");
      } else if (prediction === "swipe jaw") {
        console.log("swipe down");
      }
    },
    {
      includeSpectrogram: false,
      probabilityThreshold: 0.8,
      invokeCallbackOnNoiseAndUnknown: true,
      overlapFactor: 0.75,
    }
  );
};

btn.onclick = () => startRecognition();
```

Interactions

Once the detection is implemented, it's time for the fun part! Thinking about interactions is where the real creative work comes in.

Depending on your earphones, some interactions might already be built in. For example, Apple's AirPods can play/pause a song or answer a call when the user taps on one of them. So what other interactions would be useful for users?

It's useful to think about what this technology provides that others might not. Here are a few benefits of using on-face interactions:

- They can be used when the user needs to interact in a quiet way, compared to voice interfaces.

- They allow users to be able to move around, compared to a mouse or keyboard for example.

- They're personal and customizable.

From these benefits, we can start thinking about useful applications.

The first idea I implemented to verify that it worked was to scroll down by tapping my cheekbone.
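As a rough sketch of how a detected gesture could drive scrolling: the helper name `createGestureHandler`, the 600 ms cooldown and the 300 px scroll distance are all assumptions made for illustration, not values from my demo, and the labels need to match whatever class names were used in Teachable Machine. The cooldown is there because the recognizer analyses overlapping windows of audio; if each window is about one second long (an assumption), `overlapFactor: 0.75` would mean a prediction roughly every 250 ms, so a single tap can be reported more than once.

```javascript
// Hypothetical helper: maps gesture labels to actions and ignores repeats
// that arrive within `cooldownMs` of the last triggered action.
// `now` is injectable so the timing logic can be exercised without waiting.
function createGestureHandler(actions, cooldownMs = 600, now = () => Date.now()) {
  let lastTriggered = -Infinity;

  return function handle(label) {
    // Labels without a mapped action (for example background noise) are ignored.
    if (!Object.prototype.hasOwnProperty.call(actions, label)) return false;

    const t = now();
    if (t - lastTriggered < cooldownMs) return false; // still cooling down

    lastTriggered = t;
    actions[label]();
    return true;
  };
}

// Scrolls only when a browser `window` exists, so the file also loads elsewhere.
const scroll = (dy) => {
  if (typeof window !== "undefined") {
    window.scrollBy({ top: dy, behavior: "smooth" });
  }
};

// Assumed mapping from gesture labels to scroll actions.
const handleGesture = createGestureHandler({
  "tap cheekbone": () => scroll(300), // scroll down
  "swipe jaw": () => scroll(-300), // scroll up
});
```

Inside the `recognizer.listen` callback, calling `handleGesture(prediction)` would then replace the `console.log` calls.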

Even though the demo above shows it working, I didn't think it was the most exciting or useful interaction, at least not in front of my laptop. However, you could imagine sitting on your couch, far from your TV, and scrolling through the menu of your smart TV by swiping down on your face, with no need for a remote.

What about screenless interfaces? Could there be interesting interactions to implement when your phone is in your pocket? For example, you could launch certain apps using custom gestures. A swipe down on your cheek could read you the news, and a double tap on your jaw could launch your future custom AI agent. 🤖
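A double tap is not something the model reports on its own unless it is trained as a separate class, so one option is to derive it from two single detections that are close in time. The sketch below assumes hypothetical names (`createDoubleGestureDetector`, `minGapMs`, `maxGapMs`) and untuned default values; the minimum gap is there so that one tap seen in two overlapping audio windows is not mistaken for two taps.

```javascript
// Hypothetical helper: reports a "double" gesture when the same label is seen
// twice, separated by at least `minGapMs` and at most `maxGapMs`.
function createDoubleGestureDetector(label, { minGapMs = 300, maxGapMs = 1200 } = {}) {
  let previous = null; // timestamp of the last unpaired detection, if any

  return function onDetection(detectedLabel, timestampMs) {
    if (detectedLabel !== label) return false;

    if (previous !== null) {
      const gap = timestampMs - previous;
      if (gap < minGapMs) {
        // Probably the same tap reported again; keep the latest timestamp.
        previous = timestampMs;
        return false;
      }
      if (gap <= maxGapMs) {
        previous = null; // both taps are consumed
        return true;
      }
    }

    // First tap, or the previous one is too old to pair with.
    previous = timestampMs;
    return false;
  };
}

// Example: const isDoubleTapJaw = createDoubleGestureDetector("tap jaw");
// then, in the listen callback: if (isDoubleTapJaw(prediction, Date.now())) { ... }
```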

A more realistic use case could be a remote controller for your camera! If you're taking a picture or recording a video, you could use these gestures to trigger the timer or zoom in from a distance.

There are definitely a lot more interactions that could be implemented. A nice creative exercise is to look at all the devices and interfaces you're already controlling one way or another, and think about how you could replace or enhance any of these using this technology.

Conclusion

Considering that my initial goal was to validate that this project could be recreated using JavaScript, I'm happy to say that it works! 🎉

It could be made more robust by recording more training samples and improving the accuracy of the predictions, but overall, I'm happy with the result as a prototype.
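Besides recording more samples, a complementary idea (not something I tried in this prototype) would be to smooth the predictions: keep the last few labels and only act when one of them wins a clear majority. The names `createPredictionSmoother`, `size` and `minVotes` below are hypothetical, and the default values are guesses that would need tuning against real recordings.

```javascript
// Hypothetical helper: keeps the last `size` labels and returns the label that
// appears at least `minVotes` times among them, or null when none does.
function createPredictionSmoother(size = 5, minVotes = 3) {
  const recent = [];

  return function push(label) {
    recent.push(label);
    if (recent.length > size) recent.shift(); // drop the oldest label

    // Count how often each label appears in the current window.
    const counts = new Map();
    for (const l of recent) counts.set(l, (counts.get(l) || 0) + 1);

    // Find the most frequent label.
    let best = null;
    let bestCount = 0;
    for (const [l, c] of counts) {
      if (c > bestCount) {
        best = l;
        bestCount = c;
      }
    }

    return bestCount >= minVotes ? best : null;
  };
}
```

The trade-off is latency: the more votes are required, the longer it takes before a gesture is acted on.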

I also started this project with a few initial questions that I wanted to be able to answer.

Q&A

Does it work with any Bluetooth earphones?

I tested it with AirPods 1st gen and it worked. I would assume that as long as the earphones have their microphones placed around the bottom of the device, they should be able to pick up the sound of gestures on people's skin. One thing to look out for is whether your earbuds have a noise cancelling feature. My AirPods don't, but I assume you would have to turn the feature off for them to be able to pick up such subtle sounds.
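Since everything depends on the earbuds' microphone being the one the browser listens to, it can help to check which audio inputs the browser sees. This is only a sketch: `findMicrophones` is a hypothetical helper, and I'm assuming the recognizer uses the default input, so the earbuds would still need to be selected as the default microphone in the system or browser settings.

```javascript
// Hypothetical helper: finds audio inputs whose label contains `nameHint`.
// `devices` is expected to have the shape returned by
// navigator.mediaDevices.enumerateDevices() (objects with `kind` and `label`).
function findMicrophones(devices, nameHint) {
  const hint = nameHint.toLowerCase();
  return devices.filter(
    (d) => d.kind === "audioinput" && d.label.toLowerCase().includes(hint)
  );
}

// Browser-only usage. Labels are usually empty until the user has granted
// microphone permission, so this is best run after the first click.
// const devices = await navigator.mediaDevices.enumerateDevices();
// console.log(findMicrophones(devices, "airpods"));
```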

Does it work with background noise?

According to the research paper, it does. However, with the model I trained using Teachable Machine, it doesn't really.
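This won't make the model itself any better at handling noise, but one possible mitigation is to be stricter about which predictions are acted on. The sketch below uses a hypothetical helper, `pickConfidentLabel`, that rejects low scores and ignores a noise class. The `"Background Noise"` label and the 0.9 threshold are assumptions; the exact label strings can be checked with `recognizer.wordLabels()`.

```javascript
// Hypothetical helper: returns the winning label, or null when the result
// should be ignored. `scores` and `labels` are parallel arrays.
function pickConfidentLabel(
  scores,
  labels,
  { minScore = 0.9, ignored = ["Background Noise"] } = {}
) {
  if (scores.length === 0) return null;

  // Find the index of the highest score (also works with typed arrays).
  let best = 0;
  for (let i = 1; i < scores.length; i++) {
    if (scores[i] > scores[best]) best = i;
  }

  if (scores[best] < minScore) return null; // not confident enough
  if (ignored.includes(labels[best])) return null; // noise class won
  return labels[best];
}
```

In the `recognizer.listen` callback, `pickConfidentLabel(result.scores, classLabels)` could stand in for the `indexOf(Math.max(...))` lookup, with a `null` result meaning "do nothing".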

What other gestures are available?

In the research, they showcase gestures on different parts of the ear. However, these didn't work in my prototype, possibly because I did not record enough samples for the model to detect them accurately.

Does it work when you play music?

Yes! The microphone doesn't seem to pick up the music it's playing so it's able to detect the sound made by the gestures.

Would it be able to connect only to web apps?

This prototype is built using JavaScript, so on a computer it can be implemented in a web app, Chrome extension, or Electron app, and on a mobile phone it could be implemented in a Progressive Web App.

To interact with another device such as a smart TV, it would probably need to be implemented in Swift, Kotlin or Java, depending on the TV.